
What are Performance Testing Team OKRs?

The Objectives and Key Results (OKR) framework is a simple goal-setting methodology that was introduced at Intel by Andy Grove in the 1970s. It became popular after John Doerr introduced it to Google in the 1990s, and it's now used by teams of all sizes to set and track ambitious goals at scale.

Crafting effective OKRs can be challenging, particularly for beginners. The core of your planning should be outcomes rather than projects.

We have a collection of OKR examples for Performance Testing Teams to give you some inspiration. You can use any of the templates below as a starting point for your OKRs.

If you want to learn more about the framework, you can read our OKR guide online.

The best tools for writing perfect Performance Testing Team OKRs

Here are 2 tools that can help you draft your OKRs in no time.

Tability AI: to generate OKRs based on a prompt

Tability AI lets you describe your goals in a prompt and generates a fully editable OKR template in seconds.

1. Create a Tability account

2. Click on Generate goals using AI

3. Describe your goals in a prompt

4. Get your fully editable OKR template

5. Publish to start tracking progress and get automated OKR dashboards

Watch the video below to see it in action 👇

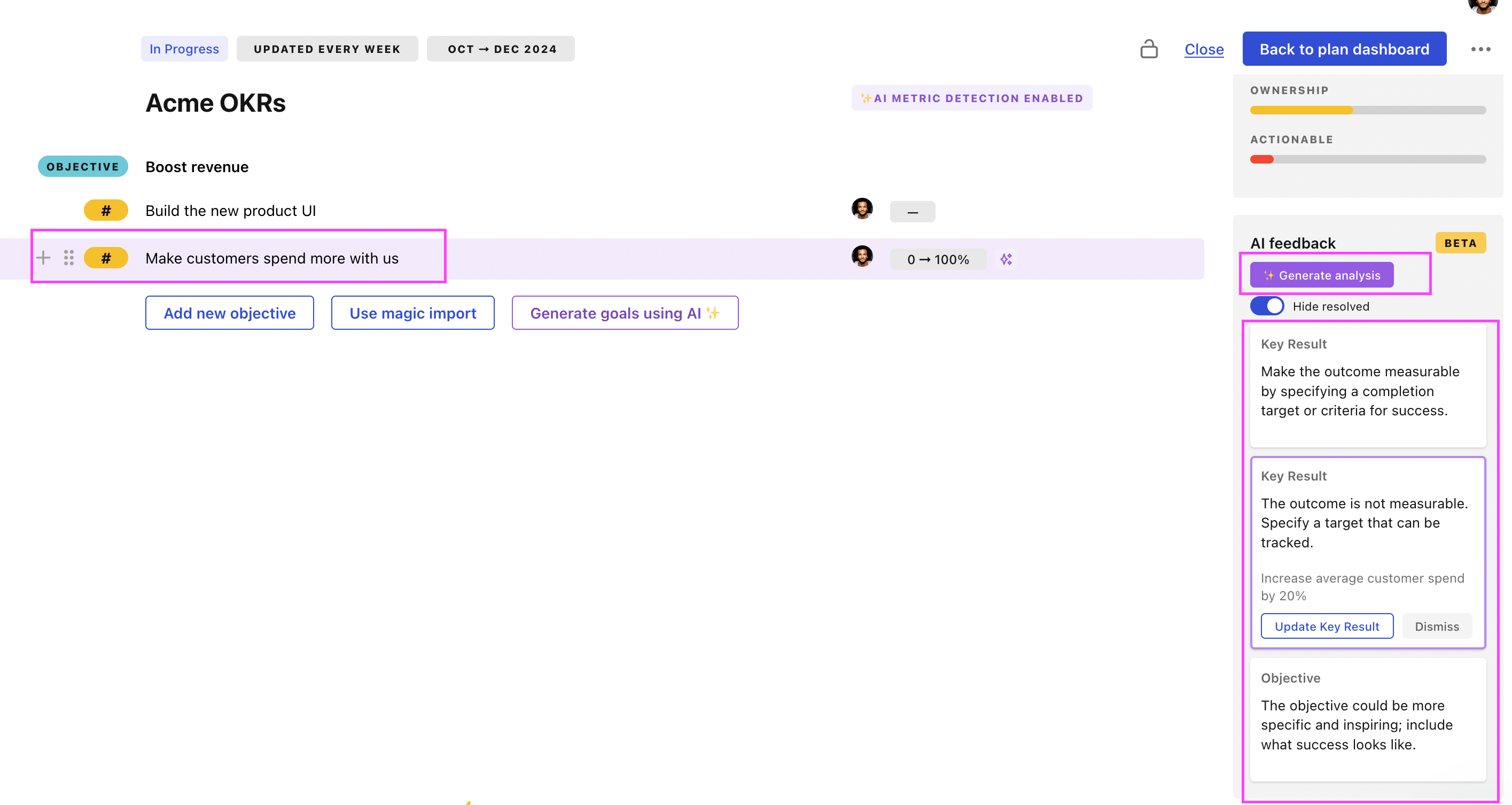

Tability Feedback: to improve existing OKRs

You can use Tability's AI feedback to improve your OKRs if you already have existing goals.

1. Create your Tability account

2. Add your existing OKRs (you can import them from a spreadsheet)

3. Click on Generate analysis

4. Review the suggestions and decide to accept or dismiss them

5. Publish to start tracking progress and get automated OKR dashboards

Tability will scan your OKRs and offer suggestions to improve them. These range from a small rewording that makes a statement clearer to a complete rewrite of the entire OKR.

Performance Testing Team OKRs examples

Below is a list of Objectives and Key Results templates for Performance Testing Teams. We also included strategic projects for each template to make it easier to understand the difference between key results and projects.

Hope you'll find this helpful!

OKRs to enhance performance testing for v2 services

Objective: Enhance performance testing for v2 services

KR: Improve system ability to handle peak load by 30%

- Optimize current system code for better efficiency

- Implement load balancing techniques across the servers

- Increase server capacity to handle increased load

KR: Identify and reduce service response time by 20%

- Analyze current service response times

- Implement solutions to enhance service speed by 20%

- Identify bottlenecks and inefficiencies in service delivery

KR: Achieve 100% test coverage for all v2 services

- Implement and run newly developed tests

- Identify and create additional tests needed

- Review current test coverage for all v2 services
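Percentage-based key results like the ones above are easier to track once the baseline and target are written down as numbers. The following is a minimal sketch of one way to compute progress, not a description of how Tability calculates it. The function name `kr_progress` and all baseline and current values are hypothetical and chosen only for illustration.

```python
def kr_progress(baseline: float, current: float, target: float) -> float:
    """Return progress toward a key result as a fraction between 0 and 1.

    Works for metrics that should go up (peak load) or down (response time),
    because progress is measured along the baseline-to-target direction.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    fraction = (current - baseline) / (target - baseline)
    # Clamp so that regressions read as 0% and overshoots read as 100%.
    return max(0.0, min(1.0, fraction))


# Hypothetical numbers, for illustration only.
# KR: reduce service response time by 20% (baseline 500 ms, so target 400 ms).
response_time = kr_progress(baseline=500.0, current=450.0, target=400.0)

# KR: improve peak load handling by 30% (baseline 1000 req/s, so target 1300 req/s).
peak_load = kr_progress(baseline=1000.0, current=1150.0, target=1300.0)

print(f"Response time KR: {response_time:.0%}")
print(f"Peak load KR: {peak_load:.0%}")
```

Measuring along the baseline-to-target direction means the same function handles "increase" and "decrease" key results without a separate flag.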

OKRs to successfully migrate legacy DWH postgres db into the data lake using Kafka

Objective: Successfully migrate legacy DWH postgres db into the data lake using Kafka

KR: Achieve 80% completion of data migration while ensuring data validation

- Monitor progress regularly to achieve 80% completion promptly

- Establish a detailed plan for the data migration process

- Implement a robust data validation system to ensure accuracy

KR: Conduct performance testing and optimization ensuring no major post-migration issues

- Develop a comprehensive performance testing plan post-migration

- Execute tests to validate performance metrics

- Analyze test results to optimize system performance

KR: Develop a detailed migration plan with respective teams by the third week

- Outline detailed migration steps with identified teams

- Identify relevant teams for migration plan development

- Finalize and share migration plan by third week

OKRs to achieve unprecedented effectiveness and success in testing methods

Objective: Achieve unprecedented effectiveness and success in testing methods

KR: Implement a testing system to improve accuracy by 30%

- Develop a testing process based on the inaccuracies identified in the current system

- Incorporate feedback loop to continually enhance the system

- Identify existing inaccuracies in the current system

KR: Conduct 2 training sessions weekly to enhance team members' testing skills

- Develop relevant testing skill modules for team training

- Send reminders and materials for scheduled sessions to team

- Organize weekly schedule to slot in two training sessions

KR: Minimize error percentage to below 5% via rigorous repeated testing initiatives

- Review and continuously improve testing methodologies

- Implement repetitive testing for all features

- Develop a comprehensive software testing protocol

OKRs to successfully complete the GPU component

Objective: Successfully complete the GPU component

KR: Reduce the number of performance issues found during testing by 50%

- Integrate automated testing in the development process

- Implement thorough code reviews before initiating tests

- Increase training sessions on effective coding practices

KR: Quality review passed in all 3 stages of the GPU component lifecycle

- Update GPU component lifecycle quality control procedures

- Discuss the successful review outcome with the team

- Document all observations during GPU component lifecycle stages

KR: Achieve 80% project milestone completions on GPU component development by the period end

- Assign experienced team for GPU component development

- Regularly track and review progress of project completion

- Prioritize daily tasks towards the project's milestones

OKRs to develop robust performance metrics for the new enterprise API

Objective: Develop robust performance metrics for the new enterprise API

KR: Deliver detailed API metrics report demonstrating user engagement and API performance

- Identify key API metrics to measure performance and user engagement

- Analyze and compile API usage data into a report

- Present the metrics report to the team and discuss it

KR: Establish three key performance indicators showcasing API functionality by Q2

- Launch the key performance indicators

- Develop measurable criteria for each selected feature

- Identify primary features to assess regarding API functionality

KR: Achieve 95% accuracy in metrics predictions testing by end of quarter

- Develop comprehensive understanding of metrics prediction algorithms

- Perform consistent testing on prediction models

- Regularly adjust algorithms based on testing results

Performance Testing Team OKR best practices

Generally speaking, your objectives should be ambitious yet achievable, and your key results should be measurable and time-bound (using the SMART framework can be helpful). It is also recommended to list strategic initiatives under your key results, as it'll help you avoid the common mistake of listing projects in your KRs.

Here are a few best practices extracted from our OKR implementation guide 👇

Tip #1: Limit the number of key results

The #1 role of OKRs is to help you and your team focus on what really matters. Business-as-usual activities will still be happening, but you do not need to track your entire roadmap in the OKRs.

We recommend having 3-4 objectives, and 3-4 key results per objective. A platform like Tability can run audits on your data to help you identify the plans that have too many goals.
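The 3-4 objectives and 3-4 key results guideline is simple enough to check by hand or with a few lines of code. The sketch below is a hypothetical audit written for illustration; it is not Tability's audit feature, and the function name `audit_plan`, its parameters, and the overloaded sample objective are all assumptions.

```python
def audit_plan(
    plan: dict[str, list[str]],
    max_objectives: int = 4,
    max_key_results: int = 4,
) -> list[str]:
    """Return a list of warnings for a plan that tracks too many goals.

    `plan` maps each objective to its list of key results.
    """
    warnings = []
    if len(plan) > max_objectives:
        warnings.append(
            f"{len(plan)} objectives in the plan (recommended maximum: {max_objectives})"
        )
    for objective, key_results in plan.items():
        if len(key_results) > max_key_results:
            warnings.append(
                f"'{objective}' has {len(key_results)} key results "
                f"(recommended maximum: {max_key_results})"
            )
    return warnings


plan = {
    "Enhance performance testing for v2 services": [
        "Improve system ability to handle peak load by 30%",
        "Identify and reduce service response time by 20%",
        "Achieve 100% test coverage for all v2 services",
    ],
    # Hypothetical objective with too many key results, for illustration only.
    "Hypothetical overloaded objective": ["KR 1", "KR 2", "KR 3", "KR 4", "KR 5"],
}

for warning in audit_plan(plan):
    print(warning)
```

An empty result means the plan stays within the recommended limits.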

Tip #2: Commit to weekly OKR check-ins

Don't fall into the set-and-forget trap. It is important to adopt a weekly check-in process to get the full value of your OKRs and make your strategy agile – otherwise this is nothing more than a reporting exercise.

Being able to see trends for your key results will also keep you honest.

Tip #3: No more than 2 yellow statuses in a row

Yes, this is another tip for goal-tracking instead of goal-setting (but you'll find plenty of OKR examples above). Once you have your goals defined, it will be your ability to keep the right sense of urgency that will make the difference.

As a rule of thumb, it's best to avoid having more than 2 yellow/at risk statuses in a row.

Make a call on the 3rd update. You should be either back on track, or off track. This sounds harsh but it's the best way to signal risks early enough to fix things.
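The rule of thumb above can be written as a small check over a key result's check-in history. This is a minimal sketch under stated assumptions: the status labels `"green"`, `"yellow"`, and `"red"` and the function names are hypothetical, standing in for on track, at risk, and off track.

```python
def trailing_yellows(history: list[str]) -> int:
    """Count how many of the most recent check-ins in a row were yellow."""
    count = 0
    for status in reversed(history):
        if status != "yellow":
            break
        count += 1
    return count


def must_make_call(history: list[str], limit: int = 2) -> bool:
    """Return True when the next update should be green or red, not yellow."""
    return trailing_yellows(history) >= limit


# Hypothetical check-in histories, oldest first.
print(must_make_call(["green", "yellow", "yellow"]))
print(must_make_call(["yellow", "green", "yellow"]))
```

Only the most recent run of yellow statuses counts, so a key result that recovered to green and later slipped again starts with a fresh allowance.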

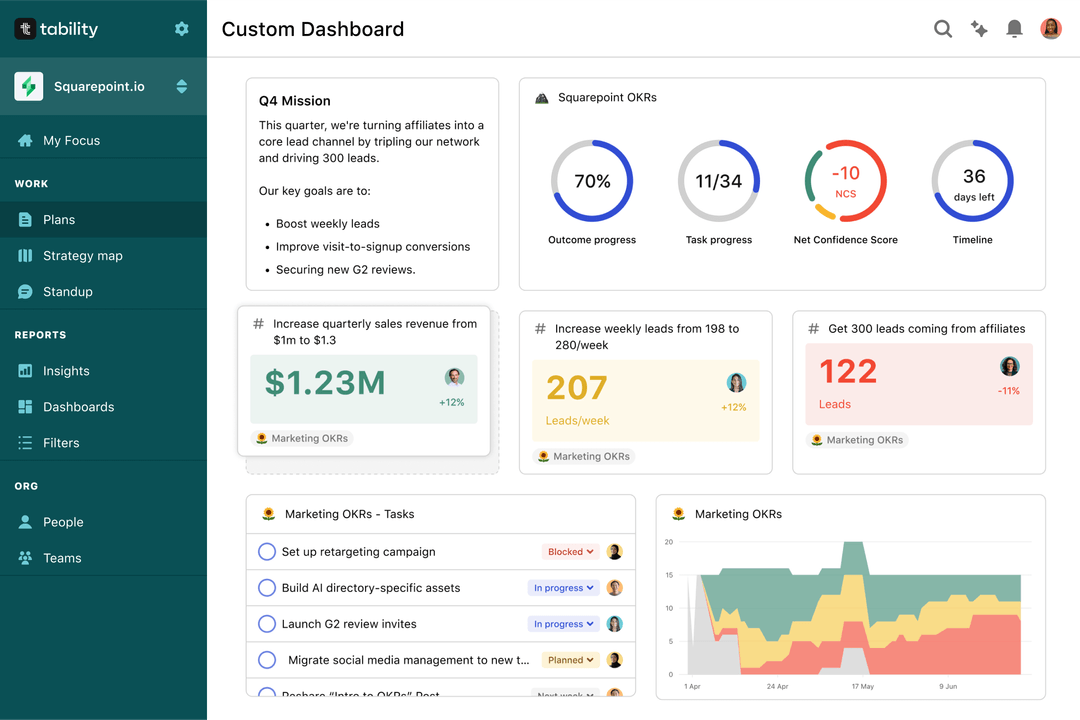

Save hours with automated Performance Testing Team OKR dashboards

The rules of OKRs are simple. Quarterly OKRs should be tracked weekly, and yearly OKRs should be tracked monthly. Reviewing progress periodically has several advantages:

- It brings the goals back to top of mind

- It will highlight poorly set OKRs

- It will surface execution risks

- It improves transparency and accountability

Spreadsheets are enough to get started. Then, once you need to scale, you can use Tability to save time with automated OKR dashboards, data connectors, and actionable insights.

How to get Tability dashboards:

1. Create a Tability account

2. Use the importers to add your OKRs (works with any spreadsheet or doc)

3. Publish your OKR plan

That's it! You will instantly get access to 10+ dashboards to monitor progress, visualise trends, and identify risks early.
