Tability is a cheat code for goal-driven teams. Set perfect OKRs with AI, and stay focused on the work that matters.

What are Testers OKRs?

The OKR acronym stands for Objectives and Key Results. It's a goal-setting framework that was introduced at Intel by Andy Grove in the 70s, and it became popular after John Doerr introduced it to Google in the 90s. OKRs help teams have a shared language to set ambitious goals and track progress towards them.

OKRs are quickly gaining popularity as a goal-setting framework. But it's not always easy to know how to write your goals, especially if it's your first time using OKRs.

To aid you in setting your goals, we have compiled a collection of OKR examples customized for Testers. Take a look at the templates below for inspiration and guidance.

If you want to learn more about the framework, you can read our OKR guide online.

The best tools for writing perfect Testers OKRs

Here are 2 tools that can help you draft your OKRs in no time.

Tability AI: to generate OKRs based on a prompt

Tability AI allows you to describe your goals in a prompt, and generate a fully editable OKR template in seconds.

1. Create a Tability account

2. Click on Generate goals using AI

3. Describe your goals in a prompt

4. Get your fully editable OKR template

5. Publish to start tracking progress and get automated OKR dashboards

Watch the video below to see it in action 👇

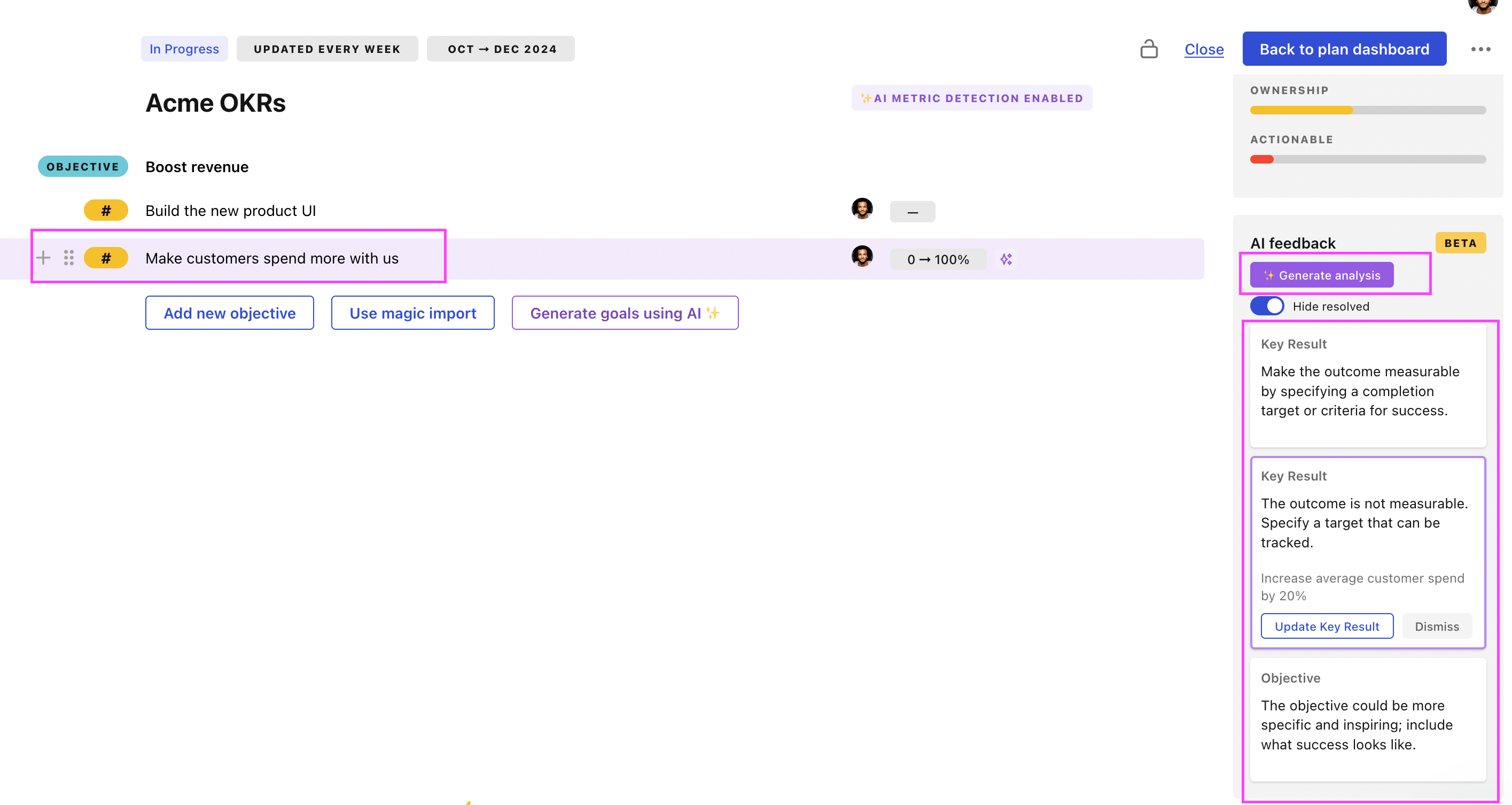

Tability Feedback: to improve existing OKRs

You can use Tability's AI feedback to improve your OKRs if you already have existing goals.

1. Create your Tability account

2. Add your existing OKRs (you can import them from a spreadsheet)

3. Click on Generate analysis

4. Review the suggestions and decide to accept or dismiss them

5. Publish to start tracking progress and get automated OKR dashboards

Tability will scan your OKRs and offer different suggestions to improve them. This can range from a small rewrite of a statement to make it clearer to a complete rewrite of the entire OKR.

Testers OKR examples

We've added many examples of Testers Objectives and Key Results, but we did not stop there. Understanding the difference between OKRs and projects is important, so we also added examples of strategic initiatives that relate to the OKRs.

Hope you'll find this helpful!

OKRs to develop and launch a new API for the CAD company

Objective: Develop and launch a new API for the CAD company

KR: Receive positive feedback and testimonials from beta testers and early adopters

- Share positive feedback and testimonials on social media platforms and company website

- Offer incentives, such as discounts or freebies, to encourage beta testers and early adopters to provide feedback and testimonials

- Create a feedback form to collect positive experiences and testimonials from users

- Send personalized emails to beta testers and early adopters requesting feedback and testimonials

KR: Complete the API design and documentation, incorporating all required functionalities

KR: Successfully implement API integration with multiple CAD software applications

- Provide training and support to users for effectively utilizing the integrated APIs

- Test the API integration with each CAD software application to ensure seamless functionality

- Develop comprehensive documentation for the API integration process with each CAD software application

- Identify and research the CAD software applications that require API integration

KR: Achieve a minimum of 95% API uptime during the testing and deployment phase

OKRs to create a comprehensive and applicable certification exam

Objective: Create a comprehensive and applicable certification exam

KR: Define 100% of exam components and criteria within 6 weeks

- Finalize the exam structure and question types

- Develop clear marking criteria for each component

- Identify the key subject areas for the exam

KR: Develop 200 high-quality exam questions and answers

- Research and outline relevant topics for questions

- Create comprehensive answers for each question

- Write 200 unique, high-quality exam questions

KR: Pilot exam with 30 volunteer testers and achieve 85% satisfaction rate

- Analyze feedback and achieve 85% satisfaction rate

- Identify and enroll 30 volunteers for the pilot exam

- Administer pilot exam to volunteer testers

OKRs to improve performance testing for V2 services

Objective: Improve performance testing for V2 services

KR: Increase the successful pass rate of performance tests to 95% from existing results

- Develop and implement a targeted improvement plan for testing

- Conduct regular training sessions for performance test takers

- Continuously review and update testing techniques

KR: Develop a comprehensive test strategy addressing all aspects of V2 services by week 4

- Develop a detailed plan for testing each aspect

- Schedule testing stages within first 4 weeks

- Identify key aspects and potential risks of V2 services

KR: Reduce the average run-time for performance tests by 20% compared to current timings

- Implement more efficient testing algorithms and techniques

- Upgrade testing hardware or software to improve speed

- Identify and eliminate bottlenecks in the current performance test process

OKRs to enhance release quality and ensure punctuality

Objective: Enhance release quality and ensure punctuality

KR: Increase beta testing completion rate to 100% before official release

- Provide comprehensive support to facilitate timely completion

- Streamline beta testing process for better efficiency and time management

- Implement a reward system to motivate beta testers

KR: Achieve 100% adherence to target release dates across all projects

- Regularly monitor and update progress towards release dates

- Establish firm, achievable deadlines for all project stages

- Ensure effective communication within the project team

KR: Decrease post-release bugs by 30% compared to previous quarter

- Implement stricter code review processes

- Increase automated testing routines

- Invest in ongoing team skill training

OKRs to enhance the efficiency and accuracy of our testing procedure

Objective: Enhance the efficiency and accuracy of our testing procedure

KR: Implement training workshops resulting in 100% of testers upskilled in advanced testing methods

- Schedule and conduct training workshops for all testers

- Develop comprehensive workshop materials on advanced testing methods

- Evaluate testers' skills post-workshop to ensure progress

KR: Achieve a 15% reduction in testing time through process optimization and procedural changes

- Evaluate current testing process for areas of inefficiency

- Implement automation software to expedite testing

- Train staff in new optimized testing procedures

KR: Reduce testing errors by 25% through improved automation and techniques

OKRs to improve testing efficiency through AI integration

Objective: Improve testing efficiency through AI integration

KR: Reduce software bugs by 25% with AI algorithms

- Train AI algorithms to identify and fix recurring software bugs

- Invest in AI-based debugging tools for code review and error detection

- Integrate AI algorithms into the software development and testing process

KR: Decrease manual testing hours by 30%

- Implement automated testing protocols for recurrent tests

- Train staff to use automation tools

- Prioritize test cases for automation

KR: Implement AI testing tools in 60% of ongoing projects

- Procure and install AI testing tools in identified projects

- Train project teams on using AI testing tools

- Identify projects suitable for AI testing tool integration

Testers OKR best practices

Generally speaking, your objectives should be ambitious yet achievable, and your key results should be measurable and time-bound (using the SMART framework can be helpful). It is also recommended to list strategic initiatives under your key results, as it'll help you avoid the common mistake of listing projects in your KRs.

Here are a few best practices extracted from our OKR implementation guide 👇

Tip #1: Limit the number of key results

Having too many OKRs is the #1 mistake that teams make when adopting the framework. The problem with tracking too many competing goals is that it will be hard for your team to know what really matters.

We recommend having 3-4 objectives, and 3-4 key results per objective. A platform like Tability can run audits on your data to help you identify the plans that have too many goals.
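If you keep your plan in a script or export it from a spreadsheet, the 3-4 guideline can be checked in a few lines. The sketch below is only an illustration and is not how Tability's audits work; the `Objective` class, the `audit_plan` function, and the two limit constants are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field

# Upper ends of the "3-4 objectives, 3-4 key results" guideline (assumed limits).
MAX_OBJECTIVES = 4
MAX_KEY_RESULTS = 4


@dataclass
class Objective:
    """Hypothetical container for one objective and its key results."""
    title: str
    key_results: list[str] = field(default_factory=list)


def audit_plan(objectives: list[Objective]) -> list[str]:
    """Return one warning per place where the plan exceeds the guideline."""
    warnings = []
    if len(objectives) > MAX_OBJECTIVES:
        warnings.append(
            f"Plan has {len(objectives)} objectives (recommended: at most {MAX_OBJECTIVES})"
        )
    for objective in objectives:
        count = len(objective.key_results)
        if count > MAX_KEY_RESULTS:
            warnings.append(
                f"'{objective.title}' has {count} key results "
                f"(recommended: at most {MAX_KEY_RESULTS})"
            )
    return warnings


# Example using one of the templates above: 3 key results, so no warnings.
plan = [
    Objective(
        "Enhance release quality and ensure punctuality",
        [
            "Increase beta testing completion rate to 100% before official release",
            "Achieve 100% adherence to target release dates across all projects",
            "Decrease post-release bugs by 30% compared to previous quarter",
        ],
    )
]
print(audit_plan(plan))
```

An empty list means the plan stays within the guideline; each warning names the objective that needs trimming.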

Tip #2: Commit to weekly OKR check-ins

Setting good goals can be challenging, but without regular check-ins, your team will struggle to make progress. We recommend that you track your OKRs weekly to get the full benefits from the framework.

Being able to see trends for your key results will also keep you honest.

Tip #3: No more than 2 yellow statuses in a row

Yes, this is another tip for goal-tracking instead of goal-setting (but you'll find plenty of OKR examples above). Once you have your goals defined, it will be your ability to keep the right sense of urgency that will make the difference.

As a rule of thumb, it's best to avoid having more than 2 yellow/at risk statuses in a row.

Make a call on the 3rd update. You should be either back on track, or off track. This sounds harsh but it's the best way to signal risks early enough to fix things.
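For teams that log check-ins in a script or spreadsheet export, this rule of thumb can be expressed as a small check. This is a minimal sketch under assumptions: the status labels (`on_track`, `at_risk`, `off_track`) and the function name `allowed_next_statuses` are made up for illustration and do not come from Tability.

```python
# Hypothetical status labels; "at_risk" corresponds to a yellow status.
ON_TRACK, AT_RISK, OFF_TRACK = "on_track", "at_risk", "off_track"


def allowed_next_statuses(history: list[str], max_at_risk_streak: int = 2) -> set[str]:
    """Return the statuses the next check-in may use.

    `history` is ordered oldest to newest. Once the most recent
    `max_at_risk_streak` check-ins were all at risk, the next update
    has to make a call: back on track, or off track.
    """
    if max_at_risk_streak < 1:
        raise ValueError("max_at_risk_streak must be at least 1")
    recent = history[-max_at_risk_streak:]
    streak_is_full = (
        len(recent) == max_at_risk_streak
        and all(status == AT_RISK for status in recent)
    )
    if streak_is_full:
        return {ON_TRACK, OFF_TRACK}
    return {ON_TRACK, AT_RISK, OFF_TRACK}


# Two yellow check-ins in a row: the 3rd update can no longer be yellow.
print(allowed_next_statuses([ON_TRACK, AT_RISK, AT_RISK]))
```

Only the trailing streak matters here, so a key result that recovered and later slipped again starts a fresh count.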

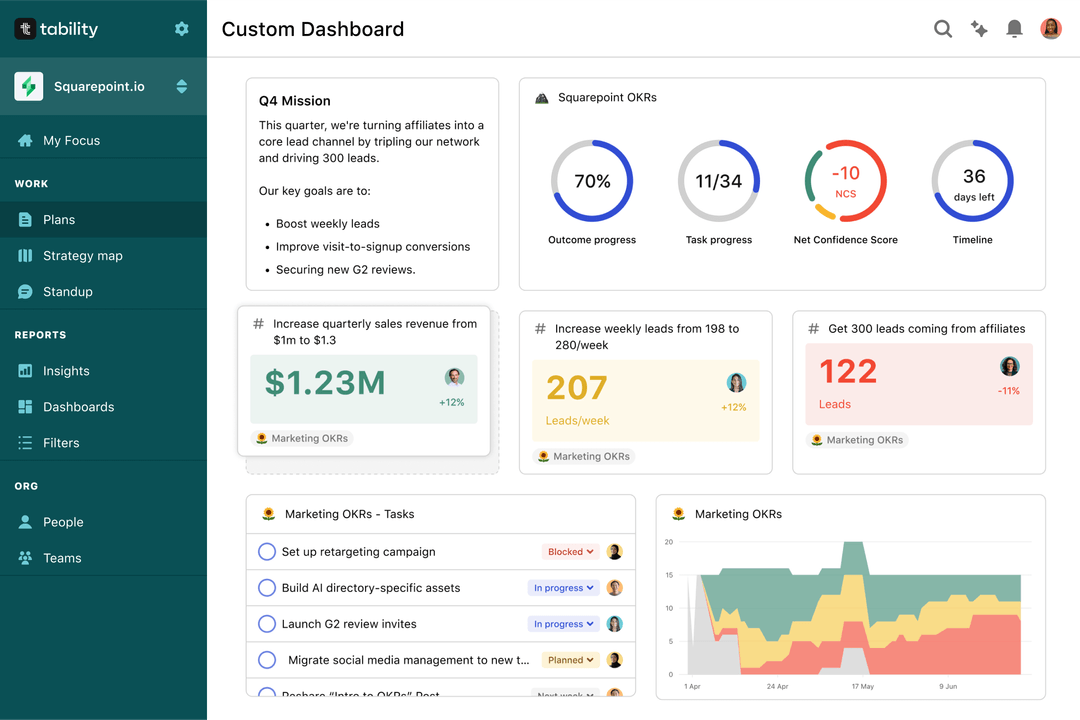

Save hours with automated Testers OKR dashboards

OKRs without regular progress updates are just KPIs. You'll need to update progress on your OKRs every week to get the full benefits from the framework. Reviewing progress periodically has several advantages:

- It brings the goals back to top of mind

- It will highlight poorly set OKRs

- It will surface execution risks

- It improves transparency and accountability

We recommend using a spreadsheet for your first OKR cycle. You'll need to get familiar with the scoring and tracking first. Then, you can scale your OKR process by using Tability to save time with automated OKR dashboards, data connectors, and actionable insights.
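To get a feel for scoring before moving to a tool, one common approach is to measure each key result linearly between its starting value and its target. The sketch below assumes that linear model, which this page does not prescribe; the function name `kr_progress` and the sample numbers (a 50-minute baseline run-time, for instance) are hypothetical.

```python
def kr_progress(baseline: float, target: float, current: float) -> float:
    """Linear progress of a key result: 0.0 at baseline, 1.0 at target.

    Works for both "increase" and "decrease" goals, and clamps the
    result to the range [0.0, 1.0].
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    raw = (current - baseline) / (target - baseline)
    return max(0.0, min(1.0, raw))


# "Reduce the average run-time for performance tests by 20%":
# assumed baseline of 50 minutes, so the target is 40 minutes.
halfway = kr_progress(baseline=50, target=40, current=45)
print(f"{halfway:.0%}")

# "Increase the successful pass rate of performance tests to 95%":
# assumed starting pass rate of 80%, currently at 89%.
pass_rate = kr_progress(baseline=80, target=95, current=89)
print(f"{pass_rate:.0%}")
```

The same formula works as a spreadsheet column, which keeps weekly check-ins comparable across key results with different units.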

How to get Tability dashboards:

1. Create a Tability account

2. Use the importers to add your OKRs (works with any spreadsheet or doc)

3. Publish your OKR plan

That's it! You will instantly get access to 10+ dashboards to monitor progress, visualise trends, and identify risks early.

More Testers OKR templates

We have more templates to help you draft your team goals and OKRs.

- OKRs to improve accuracy of financial statement reporting

- OKRs to streamline publication of support agent knowledge articles

- OKRs to improve efficiency in team support

- OKRs to generate 22 NBS marketing opportunities during live events

- OKRs to increase lead generation from organic and paid social media outlets

- OKRs to enhance customer experience satisfaction